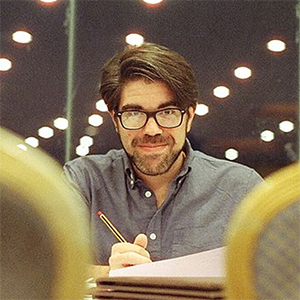

Eryk Salvaggio, known as User:Owlsmcgee on Wikipedia, is Brown University’s Visiting Scholar. Thanks to this sponsorship by the John Nicholas Brown Center for Public Humanities and Cultural Heritage, Eryk has remote access to academic sources to help him improve articles. Here, he reflects on one particular article that was made possible by this Visiting Scholars connection.

“Lol, computers can’t be racist.”

This comment, or a rough version of it, appeared to me sometime last year during an ill-advised political discussion on Twitter. It was the type of comment that social media has made us all very good at: reductive, simplistic, and glib. But when I turned to my regular source of internet-argument-winning evidence — Wikipedia — I was shocked to discover that nobody had assembled anything on the topic of how bias can infiltrate the way humans process and respond to information.

Plenty of evidence existed to show this was the case. It’s fairly simple to understand: data is entered into algorithms by humans, and humans make mistakes. They also make omissions based on subjective criteria: which information is valuable, and which information isn’t? There was a particular irony to finding this missing from Wikipedia, after all.

While lots of information about this form of bias had been published in academic journals and in the popular press, nobody had assembled it into a Wikipedia article. So I decided I would. I’m fortunate enough to be a Wikipedia Visiting Scholar for Brown University’s John Nicholas Brown Center for Public Humanities and Cultural Heritage. In my role there, I’ve been able to focus on topics that reflect aspects of American history that had been overlooked on Wikipedia, specifically, topics related to the history of oppressed populations in the United States. I’m proud to have created articles on black and transgender cowboys, the role of the Texas Rangers in the oppression of Mexican-Americans in the early 20th Century, and the surprising racial laws that defined Oregon’s early history.

By creating the article on algorithmic bias, I was able to connect my work reading about biases of the past to the question of how those biases, without careful reflection and consideration, could become inscribed into our future.

As a Visiting Scholar, I was given access to publications from Brown’s library, tapping into scholarly journals that would otherwise be locked behind paywalls. I was able to find studies of algorithmic bias dating back to the early days of computer programming. We tend to talk about algorithmic bias as a cutting-edge problem, one that has emerged from a newfound reliance on social media feeds, machine learning, and artificial intelligence.

In fact, the problem has been well known, discussed in computer guides as early as 1976. And for four years, a computer program systematically denied women and ethnic minorities admission to a medical school in Britain, based entirely on a faulty reading of data. The algorithm treated historically low admission rates as evidence against women and minorities, and automatically rejected applicants on that basis. That was in 1982.

You might think that this case, nearly 40 years ago, would have been a wake-up call for the design of computer systems. But as we hand more data over to machines to process, analyze, and report, we lose sight of what connections those machines are making. The data itself could be flawed. Categorizations, made by humans, reflect biases. These categorizations are what computers use to make decisions — and often, without any explanation of how those conclusions were made.

My work as a Visiting Scholar has taught me a lot about how racial bias can be inscribed in surprising ways. Wikipedia is no different. Written largely by white, Western men — men like me — the encyclopedia is notably lopsided against topics related to the history of anyone else. That lack of knowledge influences our conversations in America: an implicit bias. I saw my work as a Visiting Scholar as helping to contribute a broader understanding of how bias works in America.

By extension, being able to finish my work as a Scholar with an article on Algorithmic Bias, drawing from more than 80 sources pulled from academic journals, surveys, research, and reliable news sources, reflects the kind of biases that still affect our society. I hoped the article would show, in a neutral, clear way, how these biases can be inscribed into technology if we don’t pay attention to that history.

Since publishing the article, I’ve been privy to a bit of confirmation bias myself. Many of the examples from the Wikipedia article have appeared in mainstream journalistic explanations of the risks of algorithms — examples pulled from research I can only imagine wouldn’t have been discovered if it hadn’t been outlined first on Wikipedia. I’m pleased if the article is able to bring my work looking at the past into how we think about the future. It’s a great way to conclude my year as a Wikipedia Visiting Scholar!

For more information about the Visiting Scholars program, visit wikiedu.org/visitingscholars or reach out to contact@wikiedu.org with questions. To see more of the great work Eryk has done, check out the Dashboard.

This is awesome. Thanks for your work.

Incredible research and insight! Brown University must have been thrilled to have found you! We need more unbiased, conscientious researchers like him to advance society!