ChatGPT is the internet’s shiny new toy. It’s also a potential shortcut for students to quickly generate essays and other writing assignments — which has many educators rethinking their assignment designs. (For some, that means trying to AI-proof their writing assignments; for others, it may mean teaching students how to use a text-generating AI conscientiously as a writing tool.)

How does ChatGPT work?

ChatGPT is built on top of the GPT-3 language model, a machine learning model designed to predict (to simplify it slightly) the next word in a text. It does this by analyzing a very large amount of text data, and calculating probabilistic relationships between words in a sequence. It’s designed, in other words, to produce the same patterns of word use that are present in the datasets used to train the model. (In a recent, brilliant essay, science fiction writer Ted Chiang describes ChatGPT as “a blurry JPEG of the web”.)
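To make "predicting the next word" concrete, here is a minimal sketch of a much simpler relative of such a model: a bigram counter that looks only at the single preceding word. This is a toy under stated assumptions, not how GPT-3 is implemented (GPT-3 uses a large neural network over sub-word tokens and much longer contexts). The function names and the tiny corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def next_word_probabilities(model, word):
    """Turn the raw follower counts for `word` into probabilities."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # the word never appeared (or never had a follower)
    return {follower: count / total for follower, count in counts.items()}


def predict_next_word(model, word):
    """Return the most probable next word, or None for an unseen word."""
    probabilities = next_word_probabilities(model, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Hypothetical toy corpus standing in for the "very large amount of text data".
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))
print(next_word_probabilities(model, "sat"))
```

Even this toy can only reproduce patterns that were present in its training text: asked about a word it has never seen, it has nothing to say, and it has no notion of whether a continuation is true.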

GPT-3 is being developed by OpenAI, a for-profit company that, as of 2023, is funded primarily by Microsoft. (The name is a legacy of the company’s origin as a not-for-profit research lab, but it transitioned to become a for-profit in 2019, and GPT-3 and its other products are not open source.) According to OpenAI researchers who have published about GPT-3 and its training process, the system was trained on five main datasets:

- CommonCrawl, a publicly available, broad-ranging corpus of text scraped from the open internet, contributing about 60% of the training;

- WebText2, a more curated set of text scraped from the internet, derived from URLs that were submitted to Reddit and got at least a few upvotes and more upvotes than downvotes, contributing about 22% of the training;

- Books1 and Books2, a pair of “internet-based books corpora”, the details of which are not public, contributing about 16% of the training;

- English Wikipedia, which is much smaller than any of the other datasets, but was the highest quality dataset used in training and was weighted more heavily relative to its size, contributing about 3% of the training.

So those text datasets are what GPT-3 “knows”. ChatGPT adds several features on top of that, including a system for handling natural language as a prompt, the ability to refine output by replacing only specific parts of it, and “fine-tunings” that focus its output on chat-like responses.

The release notes for a recent update also tout “improved factuality”, but I haven’t been able to find any discussion of what that means or how the system accounts for the concept of “factuality”. The core GPT-3 system, as a language model, does not rely on structured data (like Wikidata, or the “knowledge graph” projects at Google and Amazon that incorporate Wikidata and power their virtual assistants). ChatGPT’s failure modes are often what AI researchers have termed “hallucinations”: seemingly coherent statements that, upon closer inspection, are fabrications, self-contradictions, or nonsense.

What about Wikipedia?

Using ChatGPT and similar large language models to create Wikipedia content is (as of early 2023) not prohibited on Wikipedia. Some editors have started drafting a potential guideline page that spells out some of the risks of doing so as well as advice for using them effectively. (In its current form, that proposal would also require that editors declare in their edit summaries whenever they make AI-assisted edits.) Some experienced Wikipedia editors have found ChatGPT to be a useful tool to jump-start the process of drafting a new article, especially for overcoming writer’s block. (You can read about these experiments here.) In this context, editors handle sources the old-fashioned way: combing through the text, editing it as needed, throwing out anything that can’t be verified, and adding inline citations for the things that can be verified.

ChatGPT will happily generate output in the style of a Wikipedia article, and indeed it does a pretty good job of matching the impersonal, fact-focused writing style that Wikipedians strive to enforce. It “knows” what Wikipedia articles sound like, perhaps in part because one of its training datasets is exclusively Wikipedia content. However, the relationship between text and citations, which is core to how Wikipedia articles are structured, is not part of the equation.

In its current iteration, ChatGPT will typically produce a bulleted list of sources at the end of the Wikipedia article you ask for (if you explicitly ask for references/sources/citations). However, even more so than the article body, the source list is likely to consist of so-called hallucinations: plausible-sounding article titles, often with plausible publication dates from real publishers and even URLs, that don’t exist. When sources do exist, they don’t bear any specific relation to the rest of the output (although they might be relevant general sources about the topic that could be used to verify facts or identify hallucinations within the article).

For topics that don’t already exist on Wikipedia but that have a commercial element, ChatGPT also has a tendency towards promotional language and “weasel words”. For these topics — especially if they aren’t covered in books — GPT-3’s relevant training data is likely to include a lot of the sorts of marketing material that make up a large portion of the web these days. (Of course, the unfiltered web has a huge quantity of promotional garbage that was created by less sophisticated automation tools and/or churned out by disaffected pieceworkers.)

Copyright is another danger zone. So far, OpenAI has staked out a position that essentially says that users can do whatever they want with the output ChatGPT produces based on their prompts; they aren’t attempting to claim any copyright of their own on the output. However, ChatGPT frequently produces text that doesn’t come close to passing the plagiarism smell test, because it comes too close to the content and structure of some specific published text that was part of its training data. (Tech news site CNET was recently caught posting AI-generated content that amounted to close paraphrasing of real journalists.) The Wikipedia community takes copyright and plagiarism very seriously, but it’s hard to guess how the kinds of close paraphrasing that come out of AI systems will affect conventional understandings of originality, copyright, and the ethics of authorship.

What about Wikipedia writing assignments?

Easy access to ChatGPT means that we’re very likely to start seeing editors in our Student Program contribute Wikipedia content that was drafted by generative AI. (If any of it happened last term, it’s slipped under our radar so far.) Wiki Education staff are not sure what to expect, but we do want to make sure that students aren’t filling Wikipedia with ChatGPT-written nonsense or plagiarism. (That would be both harmful to Wikipedia in general and devastating to our relationship with the Wikipedia community and our capacity to provide free support for these Wikipedia writing assignments.)

Some educators are excited about exploring AI tools as part of the writing process, and have plans to incorporate them into their teaching. If you’re considering doing this as part of your Wikipedia assignment, we’d like to talk with you about it. (Please don’t do it without letting us know!)

Others are hoping to keep students from using ChatGPT. The typical failure modes and pitfalls of at least the current iteration of it mean the best things you can do are the same ones you’re already doing for your Wikipedia assignments:

- Use your subject-matter expertise to provide feedback — especially as students prepare their bibliographies *before* they start writing.

- Review what your students are drafting and posting to Wikipedia, and provide feedback to help them draft accurate and clearly-written text.

If you think it’s an important topic for your students, we suggest you also have a frank conversation with them about ChatGPT, how it works, and its potential for causing harm to Wikipedia. And if you do find that any of your students used ChatGPT, let us know — whether they conscientiously edited it into good, fact-checked content or not. We want to know how ChatGPT plays out in your classes.

Thank you for posting this article, Will. One of the concerns that I am hearing about ChatGPT from the institutional side of things is that it collects information about student users, raising privacy concerns – even leaving aside its functions in generating content and the issues of authorship associated with its text. Given how it may collect information about users, will it be ethical for educators, then, to ask students to sign up for it and engage in the context of classes?

Thanks Heather! My understanding is that it’s collecting much of the same kinds of information that other Microsoft, Google, Amazon and Facebook tools collect; just about everywhere you go on the internet, if you’re logged in to one of those sites or if you are using the same IP address as when you previously logged into one of them, you will be feeding into ad-tech user profiles. I think these data collection systems are unethical systemically, but I don’t usually think of them as especially problematic at the individual level, since it tends to be just one more stream of input for a computer user who is already under ubiquitous commercial surveillance. (Maybe there are more specific ethical concerns around the way ChatGPT collects user data that I’m not aware of.)

I think that this article is great with how informative it is about ChatGPT and how students use it! I like how open you are with ChatGPT by allowing some students to experiment with it and tell you so that you can see how the articles play out 🙂

I enjoyed this article because it was really informative. I did not know much about ChatGPT, but this informed me. I also learned that ChatGPT is not prohibited on Wikipedia, as of right now, and I thought that it would be.

As I am writing this comment, ChatGPT has released a newer version called GPT-4, which is supposedly more advanced and can produce a higher quality of data. In my opinion, the world of AI is evolving and we should not mistake it for a replacement for education. We as humans need to develop mentally on our own, and having an AI this disposable is not all good.

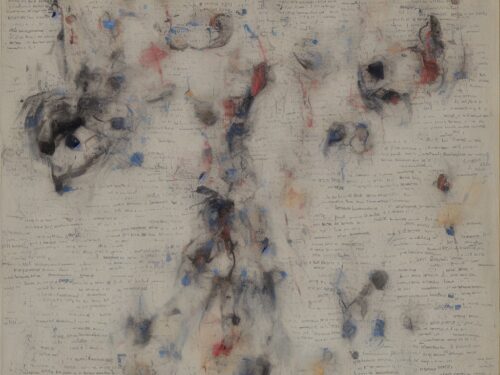

ChatGPT seems like a very resourceful tool when used in the correct manner: as mentioned in the article, it can help with jump-starting ideas and overcoming writer’s block, but it shouldn’t be used to do all of the work for you. It is an interesting thing. I have used an AI platform that allowed me to type in words, which the website then generated into an art piece; though it is obviously different from ChatGPT itself, it is something you put little to no effort into, and then technology does it for you. It is kind of scary to see what technology can already do, and it is still improving.

I agree, I think that ChatGPT can be used to help you start your article drafts, but there are some risks that come with it.

I think the use of ChatGPT and other AI sources is an interesting idea. The two paths, that students shouldn’t use it and that students should, remind me a lot of how people used to say that you wouldn’t have a calculator in your pocket at all times, only for that to be one of the many functions now done by phones. So only time will tell where this not having to write will end up. Maybe this entire comment was a prompt I made an AI do. You would never know?

I for one find chatGPT remarkable at finding sources on the internet, but I prefer to refrain from its use. It should only be used as a reference as to how to shape up your writing and generate sentences. Sort of like a crutch so students can become more proficient at writing on their own and not limit themselves to copying what chatGPT produces for them.

In June 2023, I began using ChatGPT to seek answers to various inquiries but found the responses unsatisfactory. I then shifted gears, requesting assistance in refining my English. I iteratively revised several segments, particularly focusing on financial and military history. Inquiring about preferred formatting—chapters, sections, subsections, paragraphs, or sentences—I was advised to structure content at the sentence level.

A moment that left a lasting impression was when I asked, ‘Does rasputitsa relate to the permeability of chernozem clay soil? Is rasputitsa specific to the chernozem region?’ I was convinced of its relevance, given that this connection had yet to be mentioned in the article. Subsequently, I disclosed that English isn’t my native language; I’m Dutch and an active Wikipedian. I requested text improvements in a Wikipedia-style, maintaining a business-like tone, avoiding excessive embellishments, and refraining from altering quoted text. (I was surprised that this detail wasn’t known.)

Throughout our interactions, I never sought entirely new information; rather, my goal was to enhance my English. I personally provided the text and, in the interim, made several revisions to certain introductions, albeit not entire articles. Working with ChatGPT demands a significant amount of concentration, prompting considerations on what changes were made, what remained unaltered, and whether I concurred with the modifications.