How do you describe things? Consider what you’re reading right now. Is this a blog post? Is it an article? Is it just a collection of sentences? Or is it a series of thoughts? I recently wrapped up a successful machine learning project, a capstone at the School of Data Science at the University of Virginia (UVA). Using data science (and a lot of data), this project began to answer the question of how machine learning can assist in describing and classifying things.

Background

Master’s degree students at the School of Data Science at UVA complete a capstone research project to gain experience and demonstrate proficiency in interpreting real-life industry data, processing data with machine learning techniques, recognizing when to use computing resources, and applying data science theory from the program to reveal new insights, address existing issues, or discover ways of overcoming traditional challenges.

Since the fall of 2020 I have been working with four students (Jonathan Gómez, Thomas Hartka, Binyong Liang, and Gavin Wiehl) on this research project to improve the interconnectedness of Wikidata’s content for the benefit of the Wikidata user community itself.

One of the ambitious goals of Wikidata is to describe the world through linked data. A common aspect of description is defining what things are. This concept is called basic membership. Wikidata items express basic membership first among other properties by classifying every item as either an “instance of” or “subclass of” some more general concept. You can think of “instance of” as describing a specific thing: Georgia O’Keeffe’s “Lake George Reflection” is an “instance of” a “painting.” Subclasses describe categories of things, so, for example, “painting” itself is a “subclass of” “visual artwork.” Simple enough, right? The important thing here is to sort concepts in Wikidata so that each one is classified in a place that makes sense. This kind of hierarchical arrangement makes searching and discovery easier for both humans and machines (think scripts and code). Combined with other Wikidata information, it lets you make general reference search requests such as “show me all the paintings by a particular artist.”
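To make the idea concrete, here is a minimal sketch of how “instance of” and “subclass of” statements can answer a request like “show me all the paintings by a particular artist.” It runs on a tiny in-memory list of triples rather than on Wikidata itself. The property IDs P31 (“instance of”) and P170 (“creator”) are real Wikidata properties, but the plain-text labels standing in for item IDs, the “Hypothetical Sketch” item, and the helper names `objects` and `paintings_by` are assumptions made up for illustration.

```python
# Toy triples: (subject, property, object).
# Plain labels stand in for Wikidata item IDs; "Hypothetical Sketch" is invented.
P31 = "P31"    # instance of
P279 = "P279"  # subclass of
P170 = "P170"  # creator

TRIPLES = [
    ("Lake George Reflection", P31, "painting"),
    ("Lake George Reflection", P170, "Georgia O'Keeffe"),
    ("painting", P279, "visual artwork"),
    ("Hypothetical Sketch", P31, "sketch"),
    ("Hypothetical Sketch", P170, "Georgia O'Keeffe"),
    ("sketch", P279, "visual artwork"),
]


def objects(subject, prop):
    """Return every object linked to `subject` through `prop`."""
    return {o for s, p, o in TRIPLES if s == subject and p == prop}


def paintings_by(artist):
    """Return items that are an instance of 'painting' and were created by `artist`."""
    return sorted(
        s
        for s, p, o in TRIPLES
        if p == P31 and o == "painting" and artist in objects(s, P170)
    )


print(paintings_by("Georgia O'Keeffe"))
```

The sketch item is skipped because it is an instance of “sketch,” not “painting,” which is exactly why a consistent class on each item matters for search.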

The challenge

But back to our challenge: how do you describe things? If we’re talking about types of things (paintings, sketches, and sculptures are all creative works of visual art), Wikidata uses a property called “subclass of” (P279 to superfans) to connect these concepts. A class of anything on Wikidata can be expressed with P279. This makes traversing hierarchies and taxonomies simple since you only have to use one property (P279, hey superfans!). The difficult thing is standardizing its use.
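Because a single property links each class to its parent, walking a hierarchy amounts to following that one link repeatedly. The sketch below shows the idea on a small hand-written mapping. The class names and the “creative work” parent are illustrative assumptions, not a copy of Wikidata’s actual class tree, and `superclasses` and `is_subclass_of` are hypothetical helper names.

```python
# Toy "subclass of" (P279) links: class -> list of direct parent classes.
# This hierarchy is invented for illustration and is not Wikidata's real tree.
SUBCLASS_OF = {
    "painting": ["visual artwork"],
    "sketch": ["visual artwork"],
    "sculpture": ["visual artwork"],
    "visual artwork": ["creative work"],
}


def superclasses(cls):
    """Collect every class reachable from `cls` by following P279 links upward."""
    seen = []
    stack = list(SUBCLASS_OF.get(cls, []))
    while stack:
        current = stack.pop()
        if current not in seen:  # guards against cycles and repeated parents
            seen.append(current)
            stack.extend(SUBCLASS_OF.get(current, []))
    return seen


def is_subclass_of(cls, ancestor):
    """True if `ancestor` sits anywhere above `cls` in the hierarchy."""
    return ancestor in superclasses(cls)


print(superclasses("painting"))
print(is_subclass_of("sketch", "creative work"))
```

The traversal only works as well as the links it follows: if “painting” pointed at the wrong parent, or at none, everything below it would drop out of the results.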

Wikidata is a global, multilingual project that seeks to classify every concept for which there is a Wikipedia article as well as many other concepts, beyond Wikipedia, which are useful as general reference information. While Wikipedia has articles for famous and documented topics including artworks, politicians, and geographical features, Wikidata documents these same sorts of topics more completely, including ones that are less famous and less well documented (so far in this post I’ve mentioned artworks, but I’m sure you can think of more kinds of things). So how do you teach a growing community of users how to engage with classes? How do you know if a town is a town or if it’s a city or other form of municipal area? And how do you know you’re using the correct kind of class? Example: is a painting the most general class or is there something between painting and visual artwork? Visual representation? Artistic expression? Still life? What could I possibly put for P279!?

This is a complex question with no straightforward answer. The research team for this project came up with a clever way to begin answering this question. What if we could analyze millions of descriptions that exist for items to make a recommendation for a class? What if we knew other things about what we were describing and from those “hints” we could settle on an accurate class? Put another way, what if we could abstract it from there and have just a series of relationships and try to extrapolate what a thing might be? Or compare it to a series of similar things to see if there’s a good match?

This is what this group did.

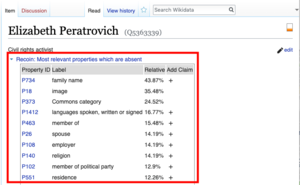

Wikidata superfans out there, you may be familiar with a popular Wikidata tool called Recoin. Recoin is a tool that makes statement recommendations based on “instance of” or “subclass of” properties. This project differs (quite significantly) from Recoin in that this group studied the reverse. After downloading and ingesting all of Wikidata, the team used machine learning technology to analyze items with and without subclass statements, then directed the algorithm to guess what subclass items ought to have. Preliminary checks by humans found that the guesses were mostly correct. Without getting bogged down in details, this finding is significant because it sets the stage for more domain-specific analysis (for example: is this a painting?). From there it wouldn’t be a stretch for other groups or community members to create the class-improvement tool we imagined earlier in this post.
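To give a feel for what “guessing a class from hints” can look like, here is a deliberately simple sketch. It is not the team’s method (their paper describes context matrix methods). It swaps in a much cruder stand-in technique: compare the set of properties on an unclassified item with the property sets of already-classified items using Jaccard similarity, then pick the class with the highest average score. The items, property sets, and function names are all made up for illustration.

```python
from collections import defaultdict

# Invented examples: item -> (properties used on the item, known class).
KNOWN = {
    "item A": ({"creator", "inception", "collection", "material used"}, "painting"),
    "item B": ({"creator", "collection", "depicts"}, "painting"),
    "item C": ({"population", "country", "coordinate location"}, "town"),
    "item D": ({"country", "coordinate location", "head of government"}, "town"),
}


def jaccard(a, b):
    """Share of properties two items have in common (0.0 when both sets are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def guess_class(properties):
    """Return (best class, average similarity per class) for an unclassified item."""
    scores = defaultdict(list)
    for props, cls in KNOWN.values():
        scores[cls].append(jaccard(properties, props))
    averaged = {cls: sum(vals) / len(vals) for cls, vals in scores.items()}
    best = max(averaged, key=averaged.get)
    return best, averaged


best, averaged = guess_class({"creator", "depicts", "inception"})
print(best, averaged)
```

A human check on the suggestions, like the preliminary review described above, would still be needed before writing any guess back to Wikidata.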

The importance

Let’s circle back to why all of this matters. First, classifying things is important for discovering things. Second, misclassifying things can be problematic, inaccurate, degrading, and possibly hurtful to individuals and to the quality of Wikidata’s data. Third, Wikidata is machine readable. Using machine learning to improve Wikidata’s data already happens, and it could go further, enhancing many aspects of representing the world as linked data very quickly thanks to batch edits. And fourth, machine learning can benefit from using Wikidata’s data. The analysis in this research project processed more than 5.4 billion triples. Access to this amount of orderly, free and open, non-profit motivated, community-curated structured data is rare. The idea that such data could be transparently quality controlled and easy to obtain is radical. This data won’t work for every kind of project, but having a structured dataset at your fingertips can allow for some very cool things to happen with machine learning.

If you’re interested in the technical details, their paper is “Context Matrix Methods for Property and Structure Ontology Completion in Wikidata.”

A special thanks to the amazing team, coordinators, and professors at the University of Virginia:

- Jonathan A. Gómez, jag2j@virginia.edu

- Thomas Hartka, trh6u@virginia.edu

- Binyong Liang, bl9m@virginia.edu

- Gavin Wiehl, gtw4vx@virginia.edu

- Lane Rasberry, Wikimedian-in-Residence

- Rafael Alvarado, Professor

Image credits: Will (Wiki Ed), CC BY-SA 4.0, via Wikimedia Commons; Georgia O’Keeffe, Public domain, via Wikimedia Commons; MediaWiki authors, CC BY-SA 3.0, via Wikimedia Commons